In this article, we examine the speech-to-text capabilities of Whisper, OpenAI’s automatic speech recognition model, using its open-source Python package. To demonstrate, we will transcribe a podcast (an mp3 file) to text and then use OpenAI’s ChatGPT API to summarize the text.

Mantium is the fastest way to achieve step one in the AI pipeline with automated, synced data preparation that gets your data cleaned and ready for use. Visit our website to learn more.

Understanding Speech-to-text

Speech-to-text technology, commonly referred to as Automatic Speech Recognition (ASR), enables computers to identify and convert spoken language into text. Although it has been around for a while, current developments in machine learning and artificial intelligence have increased its accuracy and accessibility. Speech-to-text technology has many potential benefits, including downstream data generation & transformation, improved accessibility for people with disabilities, increased data entry and transcription efficiency, and enhanced communication capabilities in various settings.

The fundamental concept underlying speech-to-text technology is to analyze audio input using algorithms and statistical models to identify uttered words and phrases. This is accomplished by splitting the audio signal into smaller parts, such as individual sounds or phonemes, and comparing those components with a large database of recognized words and language patterns.
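To make the "splitting the audio signal into smaller parts" step more concrete, here is a minimal sketch of framing, where a signal is cut into short, overlapping windows. The function name `split_into_frames` and the parameters `frame_length` and `hop_length` are illustrative assumptions, and real ASR systems typically compute spectral features from such frames rather than working on raw samples.

```python
def split_into_frames(samples, frame_length, hop_length):
    """Split a sequence of audio samples into overlapping fixed-size frames."""
    frames = []
    # Stop once a full frame no longer fits; trailing samples are dropped.
    for start in range(0, len(samples) - frame_length + 1, hop_length):
        frames.append(samples[start:start + frame_length])
    return frames


# Toy "signal" of 10 samples, frames of 4 samples, hop of 2 samples.
signal = list(range(10))
frames = split_into_frames(signal, frame_length=4, hop_length=2)
print(frames)
```

Because the hop is smaller than the frame length, neighbouring frames share samples, so a sound that straddles a frame boundary still appears whole in at least one frame.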

After transcribing speech to text, you can use it for various purposes, including creating closed captions for videos, enabling hands-free conversations on mobile devices and smart speakers, and giving access to audio information for people with hearing impairments.

About the Whisper Model

Whisper is a state-of-the-art automatic speech recognition model developed by OpenAI. It is trained on web-scale data: roughly 680,000 hours of audio paired with transcripts collected from the internet. The main focus of the Whisper approach is to simplify the speech recognition pipeline by removing the need for a separate inverse text normalization step to produce naturalistic transcriptions.

The Whisper model employs a minimalist approach to data pre-processing and trains to predict the raw text of transcripts without applying significant standardization. The training process relies on the expressiveness of sequence-to-sequence models to learn to map between utterances and their transcribed forms.

This approach allows a diverse dataset covering a broad audio distribution from various environments, recording setups, speakers, and languages. The OpenAI team developed several automated filtering methods to enhance the training dataset’s quality.

In addition, to avoid learning “transcript-ese,” the OpenAI team developed many heuristics to detect and remove machine-generated transcripts from the training dataset. In this context, the term “transcript-ese” describes the phenomenon of ASR-generated transcripts that are not naturalistic and do not accurately reflect how humans speak. Many transcripts on the internet are not human-generated but the output of existing ASR systems.
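As a rough illustration of what such a heuristic could look like, the sketch below flags transcripts that have no casing variation or no punctuation, which are common traits of raw ASR output. This is an assumption-driven example, not OpenAI's actual filter; the function name `looks_machine_generated` and the exact rules are hypothetical.

```python
def looks_machine_generated(transcript):
    """Heuristic guess: True if the transcript resembles raw ASR output."""
    letters = [ch for ch in transcript if ch.isalpha()]
    if not letters:
        return False  # nothing to judge

    # Human-written transcripts usually mix upper and lower case ...
    all_upper = all(ch.isupper() for ch in letters)
    all_lower = all(ch.islower() for ch in letters)
    # ... and contain at least some punctuation.
    has_punctuation = any(ch in ".,!?;:" for ch in transcript)

    return all_upper or all_lower or not has_punctuation


print(looks_machine_generated("hello and welcome to the show"))
print(looks_machine_generated("Hello, and welcome to the show."))
```

A filter like this will produce false positives (for example, a short human-written caption without punctuation), so in practice it would be one signal among several.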

When building the training dataset, the OpenAI team used an audio language detector to ensure that the spoken language matches the language of the transcript. If the two do not match, the (audio, transcript) pair is not included in the dataset as a speech recognition training example. The team also performs de-duplication at a transcript level between the training dataset and the evaluation datasets to avoid contamination.
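The two filtering rules in this paragraph can be sketched in a few lines. The data layout (dictionaries with `audio_lang`, `text_lang`, and `transcript` keys) and the function name `filter_training_pairs` are assumptions made for illustration; the language labels would come from separate detectors that are not shown here.

```python
def filter_training_pairs(pairs, eval_transcripts):
    """Keep pairs whose audio and transcript languages match and whose
    transcript does not also appear in the evaluation data."""
    # Normalize lightly so trivial differences do not hide duplicates.
    eval_set = {t.strip().lower() for t in eval_transcripts}
    kept = []
    for pair in pairs:
        if pair["audio_lang"] != pair["text_lang"]:
            continue  # spoken language and transcript language disagree
        if pair["transcript"].strip().lower() in eval_set:
            continue  # would contaminate the evaluation set
        kept.append(pair)
    return kept


pairs = [
    {"audio_lang": "en", "text_lang": "en", "transcript": "Good morning."},
    {"audio_lang": "de", "text_lang": "en", "transcript": "Good evening."},
    {"audio_lang": "en", "text_lang": "en", "transcript": "Test sentence."},
]
kept = filter_training_pairs(pairs, eval_transcripts=["test sentence."])
print([p["transcript"] for p in kept])
```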

The model architecture of Whisper is an encoder-decoder Transformer. The model can perform many different tasks on the same input audio signal, such as transcription, translation, voice activity detection, alignment, and language identification.
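One way a single model can handle several tasks is to tell the decoder what to do through special tokens placed at the start of its output sequence, which is the format described in the Whisper paper. The sketch below only builds that token sequence as strings; `build_task_tokens` is a hypothetical helper, not part of the whisper package.

```python
def build_task_tokens(language="en", task="transcribe", timestamps=False):
    """Build the special-token prefix that selects the decoder's task."""
    if task not in ("transcribe", "translate"):
        raise ValueError("task must be 'transcribe' or 'translate'")

    tokens = [
        "<|startoftranscript|>",  # marks the beginning of the prediction
        f"<|{language}|>",        # language of the audio
        f"<|{task}|>",            # transcribe, or translate into English
    ]
    if not timestamps:
        tokens.append("<|notimestamps|>")  # plain text, no time alignment
    return tokens


print(build_task_tokens())
print(build_task_tokens(language="fr", task="translate", timestamps=True))
```

The same audio and the same weights produce a transcription or a translation depending only on this prefix.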

Example – Summarizing Large Texts from Podcast Transcriptions Using Whisper and ChatGPT API

In this tutorial, we will learn how to transcribe a podcast (an audio file) into text and then use OpenAI’s ChatGPT API (GPT-3.5 Turbo model) to summarize the transcription. We will leverage OpenAI’s API to process the text and save the summarized output to a file.

It’s important to note that GPT-3.5 Turbo has a context length of 4097 tokens per API call, and that this limit covers both the messages we send and the response the model generates. To handle large texts, we need to split the text into smaller chunks, each within the token limit. This is why we will implement a token counter to measure the number of tokens in the input and split it accordingly.
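The arithmetic behind the chunking can be sketched as follows. The function names and the example values for the system prompt size and the reply reserve are assumptions chosen for illustration, not figures from this tutorial.

```python
import math


def user_token_budget(context_limit, system_tokens, reply_reserve):
    """Tokens left for the user message after reserving room for the
    system prompt and for the model's reply."""
    budget = context_limit - system_tokens - reply_reserve
    if budget <= 0:
        raise ValueError("nothing left for the user message")
    return budget


def chunks_needed(total_tokens, budget):
    """Number of chunks required so that each chunk fits the budget."""
    return math.ceil(total_tokens / budget)


# Assumed values: a 50-token system prompt and 500 tokens kept for the reply.
budget = user_token_budget(context_limit=4097, system_tokens=50, reply_reserve=500)
print(budget)
print(chunks_needed(15000, budget))
```

The larger the reserve for the reply, the smaller each chunk becomes and the more API calls are needed, so the reserve is a trade-off between summary length and cost.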

With Mantium, you can load audio data using our connector, preprocess and perform different transformations, all with a few clicks of the button. Visit our website to get started.

Audio file

The podcast we use in this tutorial is an episode from Apple Podcasts titled “Surviving ChatGPT with Christian Hubicki” by Software Engineering Daily. We’ve already downloaded the mp3 file for the purpose of this tutorial.

Step 1: Import required libraries

First, import the necessary libraries:

import openai
import tiktoken
import whisper

Step 2: Transcribe the podcast using Whisper

We will now load the Whisper model and transcribe the podcast. The transcribed text will be saved to a file. “base” is the name of the Whisper model version with 74 million parameters.

model = whisper.load_model("base")

# Replace /path/to/your/podcast/file.mp3 with the path to

# the podcast file you want to transcribe.

result = model.transcribe("/path/to/your/podcast/file.mp3")

print(result["text"])

article = result["text"]

# Write the transcripts to a file

with open("transcripts.txt", "w") as f:

f.write(result["text"])

f.write("\n")

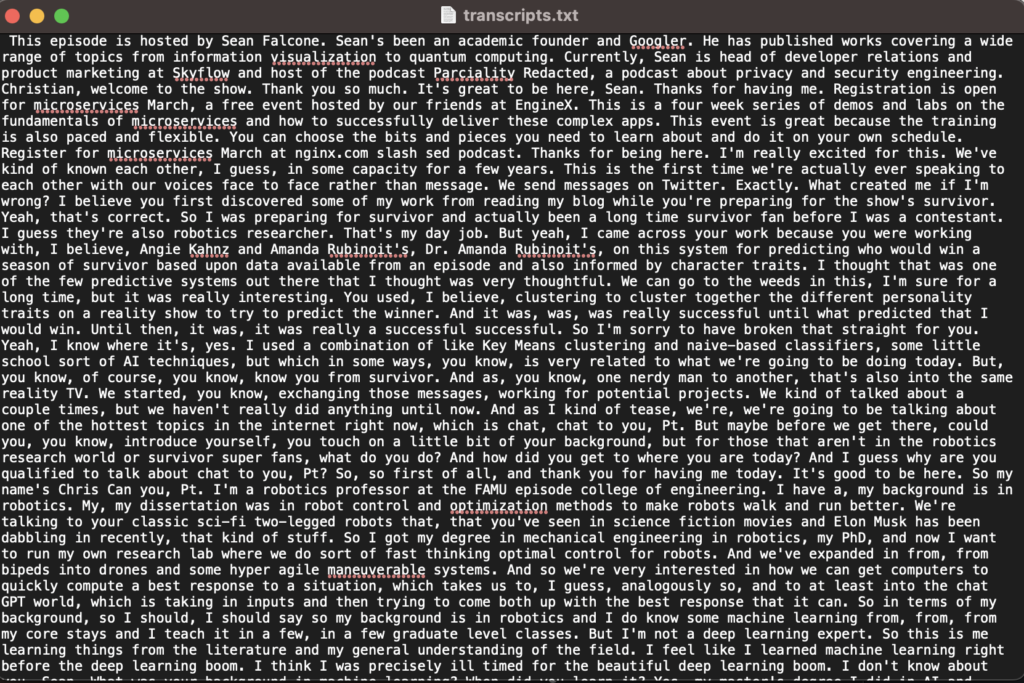

f.close()Here is an image of the transcribed text file.

Step 3: Define helper functions

We will define the following helper functions:

1. count_tokens(): Calculates the number of tokens in a given text and returns both the count and the token IDs.

# Create the encoding once so that every helper function can reuse it
encoding = tiktoken.get_encoding("cl100k_base")

def count_tokens(text):
    tokens = encoding.encode(text)
    num_tokens = len(tokens)
    return num_tokens, tokens

Note that cl100k_base is the encoding used by the GPT-3.5-Turbo model (it is also used by GPT-4). If you are using another model, here is a list of OpenAI models supported by tiktoken.

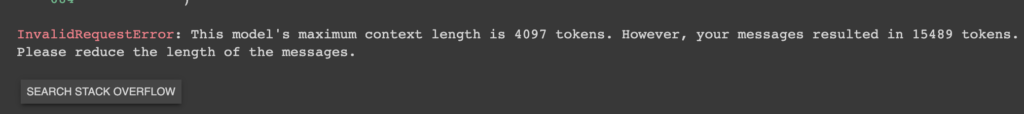

2. process_input_text(): This helper function splits the given input_text into chunks of at most max_tokens tokens, summarizes each chunk, and concatenates the summaries. Chunking is crucial for handling large texts because it keeps each API call within the token limit. Without it, the request fails with the error message below.

"InvalidRequestError: This model's maximum context length is 4097 tokens. However, your messages resulted in 15489 tokens. Please reduce the length of the messages."

The transcribed file is 15489 tokens as shown above. Let’s look at the preprocessing code below to ensure we don’t go over the maximum token allowance.

def process_input_text(input_text, max_tokens, api_key):
    openai.api_key = api_key

    # Tokenize the whole text once, then slice the token list into chunks
    num_tokens, tokens = count_tokens(input_text)
    chunks = []
    for t in range(0, num_tokens, max_tokens):
        chunks.append(tokens[t:t+max_tokens])

    summarized_text = ""
    total_tokens_input_text = 0
    total_tokens_summary = 0
    num_chunks = 0

    # Summarize each chunk and keep running totals
    for chunk in chunks:
        chunk_text = encoding.decode(chunk)
        print(f"Current chunk: {chunk_text}")
        summary = generate_summary(chunk_text)
        summarized_text += summary
        total_tokens_input_text += count_tokens(chunk_text)[0]
        total_tokens_summary += count_tokens(summary)[0]
        num_chunks += 1

    return summarized_text, total_tokens_input_text, total_tokens_summary, num_chunks

Notice that we have three variables in the code to track the total tokens of the input_text, the total tokens of the summarized text, and the total number of chunks.
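To see what the slicing step does in isolation, here is a small sketch that runs on a toy list of integers instead of real token IDs. The helper name `split_tokens` is an assumption introduced for this example; it mirrors the `range(0, num_tokens, max_tokens)` loop used in the function above.

```python
def split_tokens(tokens, max_tokens):
    """Slice a token list into consecutive chunks of at most max_tokens."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    return [tokens[i:i + max_tokens] for i in range(0, len(tokens), max_tokens)]


toy_tokens = list(range(10))
chunks = split_tokens(toy_tokens, max_tokens=4)
print(chunks)  # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]
```

Only the last chunk can be shorter than max_tokens. Because the split happens on token positions rather than sentence boundaries, a chunk may begin or end in the middle of a sentence.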

3. generate_summary(): Sends a request to the OpenAI API and receives a summarized text as a response. We added the ChatGPT API prompt to generate the summary. If you are not familiar, check out our blog that introduces the topic.

def generate_summary(input_text, model="gpt-3.5-turbo", temperature=0):
    system_content = "You are a helpful summarizer. Summarize exactly the content"

    # Buffer tokens to account for the model's context length
    buffer_tokens = 50

    # Calculate the maximum user tokens allowed
    max_user_tokens = 4097 - count_tokens(system_content)[0] - buffer_tokens

    # Truncate the input to the allowed number of tokens
    user_tokens = count_tokens(input_text)[1][:max_user_tokens]
    user_content = encoding.decode(user_tokens)

    # openai.ChatCompletion is the interface of openai package versions before 1.0
    completion = openai.ChatCompletion.create(
        model=model,
        messages=[
            {"role": "system", "content": system_content},
            {"role": "user", "content": user_content}
        ],
        temperature=temperature
    )
    return completion["choices"][0]["message"]["content"]

In the code above, the max_user_tokens variable holds the maximum number of tokens allowed for the user message. The model has a total token limit of 4097 tokens, so we subtract the number of tokens in the system content and the buffer tokens from that limit. The buffer_tokens are additional tokens reserved so that the request does not exceed the model’s maximum token limit when it generates a response, which prevents errors caused by exceeding the limit. Note that any input beyond max_user_tokens is truncated rather than summarized, so passing chunks that are no longer than max_user_tokens avoids silently dropping text.

Step 4: Main function

Now, we’ll implement the main function, which will:

- Set up the API key

- Define the input text (the transcribed podcast)

- Process the input text and generate the summarized text

- Save the summarized text to a file

if __name__ == "__main__":
    api_key = "your_openai_api_key"
    openai.api_key = api_key

    # result is the Whisper output from Step 2
    input_text = result["text"]
    max_tokens = 4097

    summarized_text, total_tokens_input_text, total_tokens_summary, num_chunks = process_input_text(input_text, max_tokens, api_key)

    print(f"Total tokens in input_text: {total_tokens_input_text}")
    print(f"Total tokens in summarized text: {total_tokens_summary}")
    print(f"Number of chunks processed: {num_chunks}")
    print(summarized_text)

    # The with-block closes the file automatically
    with open("summary.txt", "w") as file:
        file.write(summarized_text)

Replace “your_openai_api_key” with your actual API key.

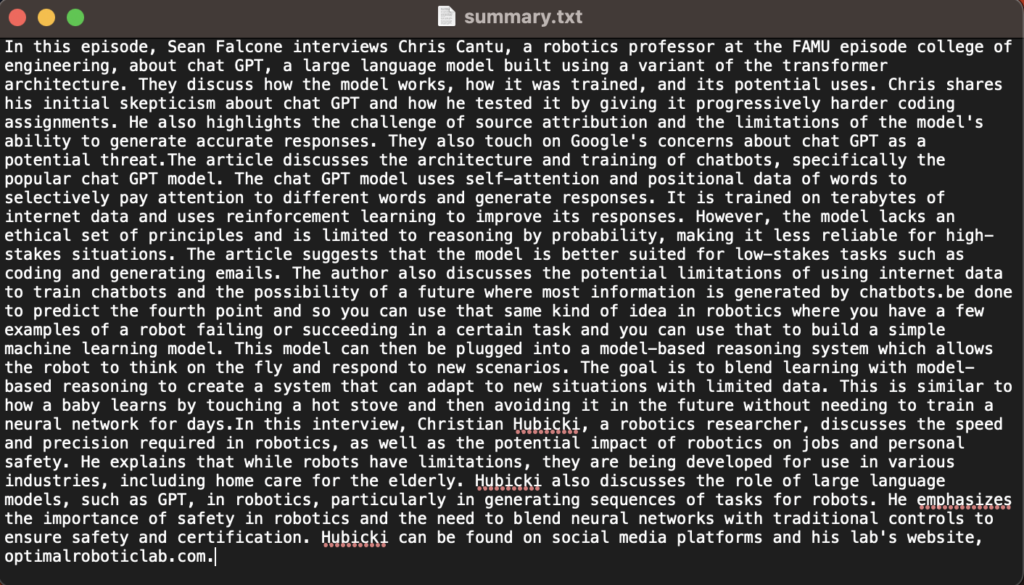

The image above shows the following metrics, which explain why we needed to split the input_text before generating the summary:

- Total tokens in input_text: 15450

- Total tokens in summarized text: 509

- Number of chunks processed: 5

Here is the summary of the whole episode (one hour, sixteen minutes of audio).

Here is the full code together

import tiktoken
import openai
import whisper

# Set encoding as a global variable
encoding = tiktoken.get_encoding("cl100k_base")

def count_tokens(text):
    # Return both the token count and the token IDs
    tokens = encoding.encode(text)
    num_tokens = len(tokens)
    return num_tokens, tokens

def generate_summary(input_text, model="gpt-3.5-turbo", temperature=0):
    system_content = (
        "You are an expert at making strong factual summarizations. "
        "Take the transcribed text submitted by the user and produce a factual useful summary. "
        "Please provide a detailed summary, that is well formatted. "
        "Do not include anything that is not in the included text"
    )

    # Buffer tokens to account for the model's context length
    buffer_tokens = 50

    # Calculate the maximum user tokens allowed
    max_user_tokens = 4097 - count_tokens(system_content)[0] - buffer_tokens

    # Truncate the input to the allowed number of tokens
    user_tokens = count_tokens(input_text)[1][:max_user_tokens]
    user_content = encoding.decode(user_tokens)

    # openai.ChatCompletion is the interface of openai package versions before 1.0
    completion = openai.ChatCompletion.create(
        model=model,
        messages=[
            {"role": "system", "content": system_content},
            {"role": "user", "content": user_content}
        ],
        temperature=temperature
    )
    return completion["choices"][0]["message"]["content"]

def process_input_text(input_text, max_tokens, api_key):
    openai.api_key = api_key

    # Tokenize the whole text once, then slice the token list into chunks
    num_tokens, tokens = count_tokens(input_text)
    chunks = []
    for t in range(0, num_tokens, max_tokens):
        chunks.append(tokens[t:t+max_tokens])

    summarized_text = ""
    total_tokens_input_text = 0
    total_tokens_summary = 0
    num_chunks = 0

    # Summarize each chunk and keep running totals
    for chunk in chunks:
        chunk_text = encoding.decode(chunk)
        print(f"Current chunk: {chunk_text}")
        summary = generate_summary(chunk_text)
        summarized_text += summary
        total_tokens_input_text += count_tokens(chunk_text)[0]
        total_tokens_summary += count_tokens(summary)[0]
        num_chunks += 1

    return summarized_text, total_tokens_input_text, total_tokens_summary, num_chunks

if __name__ == "__main__":
    # Transcribe the podcast first (Step 2)
    model = whisper.load_model("base")
    result = model.transcribe("/path/to/your/podcast/file.mp3")

    api_key = "your_openai_api_key"
    openai.api_key = api_key

    input_text = result["text"]
    max_tokens = 4097

    summarized_text, total_tokens_input_text, total_tokens_summary, num_chunks = process_input_text(input_text, max_tokens, api_key)

    print(f"Total tokens in input_text: {total_tokens_input_text}")
    print(f"Total tokens in summarized text: {total_tokens_summary}")
    print(f"Number of chunks processed: {num_chunks}")
    print(summarized_text)

    # The with-block closes the file automatically
    with open("summary.txt", "w") as file:
        file.write(summarized_text)

Conclusion

In conclusion, this article explored OpenAI’s Whisper, an automatic speech recognition model, using it to transcribe podcast content and the ChatGPT API to summarize it. We learned about the fundamentals of speech-to-text technology as well as how the Whisper model was trained, including its architecture.

We demonstrated how to transcribe a podcast into text using the Whisper model and summarized the transcriptions using OpenAI’s ChatGPT API. We also discussed the importance of token limitations and handling large texts by splitting them into smaller chunks.

By leveraging the power of both Whisper and ChatGPT, we can transform large amounts of spoken content into summarized written text, opening up new possibilities for accessibility, data analysis, and content creation.

What we are doing at Mantium

One takeaway from this tutorial is that audio is an important data source. Why? It can provide valuable insights that are not easy to glean from other sources.

In addition, working with audio can be difficult, especially in production settings, due to the need to preprocess the audio to be in an acceptable, efficient format for a model.

However, with Mantium’s audio data integration, you won’t need to write lengthy code to preprocess audio and add transformations such as summaries. At Mantium, we are building connectors that will make it easy to load audio files, e.g. from Zoom meetings, perform transcription, transformation such as summarization, and other capabilities, all with a few clicks of a button. Visit our website to learn more.
